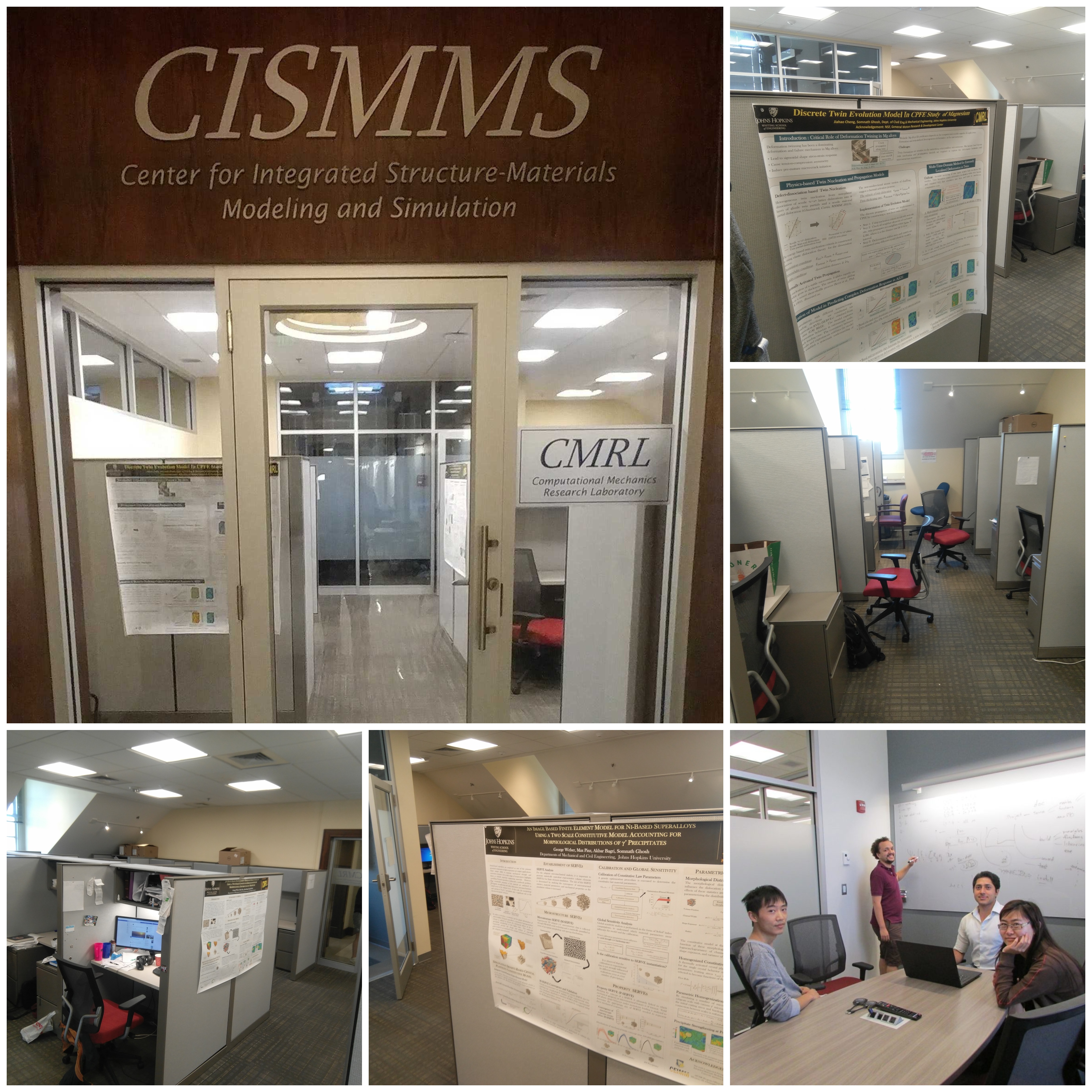

CMRL Lab – The cradle of ideas and innovations

MARCC (http://marcc.jhu.edu/)

A common computing resource for the participants of this proposal will be the Maryland Advanced Research Computing Center (MARCC). MARCC is a shared computing facility located on the Johns Hopkins University campus and funded by a State of Maryland grant to Johns Hopkins University through IDIES. MARCC is jointly managed by Johns Hopkins University and the University of Maryland, College Park. Its mission is to enable research, creative undertakings, and learning that involve and rely on the use and development of advanced computing. MARCC manages high-performance computing resources and highly reliable data storage, and provides outstanding collaborative scientific support to empower computational research, scholarship, and innovation. Co-PI J.E. Combariza is the Director of MARCC.

MARCC currently has the following hardware installed.

- Bluecrab is the main cluster at MARCC, with approximately 22,000 cores and a combined theoretical peak performance of over 1.12 PFLOP/s. Its compute nodes use a mix of Intel Ivy Bridge, Haswell, and Broadwell processors and NVIDIA K80 GPUs, connected via an FDR-14 InfiniBand interconnect. It also provides two types of storage: 2 PB of Lustre (Terascala) and 15 PB of ZFS/Linux.

- The standard compute nodes contain two Intel Xeon E5-2680v3 (Haswell) processors, each with 12 cores, and 128 GB of DDR4 memory. The processors run at 2.5 GHz (marked TDP frequency) or 2.1 GHz (AVX base frequency). Each node has a theoretical peak speed of 960 GFLOP/s.

- The large-memory nodes are Dell PowerEdge R920 servers with four Intel Ivy Bridge Xeon E7-8857v2 processors (3.0 GHz, 12 cores, 30 MB cache, 130 W). Each node has 1024 GB of RAM.

- The GPU nodes are Dell PowerEdge R730 servers with two Intel Haswell Xeon E5-2680v3 processors (12 cores, 2.5 GHz, 2.1 GHz AVX frequency, 120 W), 128 GB of 2133 MHz DDR4 RAM, and two NVIDIA K80 GPUs per node.

- The FDR-14 InfiniBand fabric uses a 2:1 oversubscribed topology with 56 Gbps bandwidth. The Lustre file system provides an aggregate bandwidth of 25 GB/s (read) and 20 GB/s (write).
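The 960 GFLOP/s per-node figure for the standard compute nodes can be reproduced from the listed specifications. The sketch below is a back-of-the-envelope check, assuming each Haswell core retires 16 double-precision FLOPs per cycle (two 256-bit AVX2 FMA units, 4 doubles each, 2 FLOPs per FMA); the function and constant names are illustrative only.

```python
def peak_gflops(sockets: int, cores_per_socket: int, clock_ghz: float,
                flops_per_cycle: int) -> float:
    """Theoretical peak double-precision throughput of one node, in GFLOP/s."""
    return sockets * cores_per_socket * clock_ghz * flops_per_cycle


# Assumption: a Haswell core issues two 256-bit FMAs per cycle,
# i.e. 2 units x 4 doubles x 2 FLOPs (multiply + add) = 16 DP FLOPs/cycle.
HASWELL_DP_FLOPS_PER_CYCLE = 2 * 4 * 2

# Standard compute node: 2 x Xeon E5-2680v3, 12 cores each.
at_tdp = peak_gflops(2, 12, 2.5, HASWELL_DP_FLOPS_PER_CYCLE)
at_avx = peak_gflops(2, 12, 2.1, HASWELL_DP_FLOPS_PER_CYCLE)

print(f"peak at 2.5 GHz (marked TDP frequency): {at_tdp:.1f} GFLOP/s")
print(f"peak at 2.1 GHz (AVX base frequency):   {at_avx:.1f} GFLOP/s")
```

The quoted 960 GFLOP/s corresponds to the 2.5 GHz clock. Under sustained AVX load the guaranteed base clock is 2.1 GHz, so a more conservative bound for vectorized codes is about 806 GFLOP/s per node.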

In addition to MARCC, the PI and Co-PIs have the following computing facilities.

Somnath Ghosh: The PI directs the Computational Mechanics Research Laboratory (CMRL) at Johns Hopkins University, which is equipped with a range of computing resources, including (i) the Homewood High Performance Cluster, (ii) the Graphics Processor Laboratory (GPL), (iii) the Bloomberg 156 Data Center, and (iv) Linux workstations. Several of these facilities are described below. A range of commercial and in-house finite element method (FEM) software is available in CMRL.

- Homewood High Performance Cluster (HHPC)

The HHPC is a shared computing facility to which faculty contribute compute nodes, while the Deans of Arts and Sciences and of Engineering provide networking hardware and systems administration. The HHPC currently contains over 1,300 compute cores linked by DDR InfiniBand. The second generation of the cluster has added another 2,400 cores. These nodes are connected to database components with 1.5 PB of disk storage. An additional cluster with over 2,000 compute cores and 4 GB of memory per core will come online by the end of September. It will be connected by a high-speed QDR InfiniBand fabric to allow efficient, tightly coupled parallel computations. More than 25% of these pooled compute resources are owned by the PI (Ghosh) and are available for the modeling efforts described in this proposal. The HHPC is housed in the Bloomberg 156 Data Center and has high-speed interconnects to the 100-Teraflop Graphics Processor Laboratory and the Data-Scope.

- 100 Teraflop Graphics Processor Laboratory (GPL) for Multiscale/Multiphysics Modeling

This compute facility contains 96 NVIDIA C2050 and 12 NVIDIA C2070 Fermi graphics cards. The host nodes, along with file servers holding several hundred terabytes of storage, are on the HHPC's new QDR InfiniBand network. This allows heterogeneous computations that span both clusters. A large fraction of the 100-Teraflop GPL will be available to the Hopkins researchers doing modeling and simulation for the proposed Center of Excellence. Some codes have achieved more than an order-of-magnitude increase in computational performance compared with the HHPC. Hopkins has recently been named a Center of Excellence by NVIDIA because of this cluster, the Data-Scope, and other innovative uses of graphics processing cards.
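The "100 Teraflop" label can be cross-checked against the card counts above. This sketch assumes the published peak characteristics of the Tesla C2050/C2070 (448 CUDA cores at a 1.15 GHz processor clock, one fused multiply-add counted as 2 FLOPs, and double precision at half the single-precision rate); those per-card figures are not stated in this document, and the variable names are illustrative.

```python
# Assumed per-card characteristics for NVIDIA Tesla C2050/C2070 (Fermi):
# 448 CUDA cores at 1.15 GHz; one FMA per core per cycle = 2 FLOPs (single
# precision); double precision runs at half the single-precision rate.
CUDA_CORES = 448
PROCESSOR_CLOCK_GHZ = 1.15
SP_FLOPS_PER_CORE_PER_CYCLE = 2

cards = {"C2050": 96, "C2070": 12}  # card counts from the GPL description
n_cards = sum(cards.values())

# Peak per card, in GFLOP/s.
sp_per_card = CUDA_CORES * PROCESSOR_CLOCK_GHZ * SP_FLOPS_PER_CORE_PER_CYCLE
dp_per_card = sp_per_card / 2

# Aggregate peak for the whole laboratory, in TFLOP/s.
sp_total_tflops = n_cards * sp_per_card / 1000
dp_total_tflops = n_cards * dp_per_card / 1000

print(f"{n_cards} cards")
print(f"aggregate SP peak: {sp_total_tflops:.1f} TFLOP/s")
print(f"aggregate DP peak: {dp_total_tflops:.1f} TFLOP/s")
```

Under these assumptions the 108 cards give roughly 111 TFLOP/s in single precision and about 56 TFLOP/s in double precision, which suggests the "100 Teraflop" figure refers to single-precision peak; the source itself does not specify the precision.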

- Bloomberg 156 Data Center

This 3,100 sq. ft facility on the Homewood campus has the cooling and electrical infrastructure to support up to 750 kVA of computing hardware. It houses the HHPC and the 100-Teraflop GPL, and hosts the Data-Scope.

The Data-Scope is a computing instrument designed to enable data analysis tasks that were previously not possible. It was designed for an aggregate sequential throughput in excess of 400 GB/s and to contain about 100 GPU cards, providing substantial floating-point processing capability. The Data-Scope is connected entirely through 10 Gbps Ethernet, with an uplink to the OCC 10G connection. There is an ongoing exploratory effort to bring 100G connectivity to JHU. The original Data-Scope consisted of 90 performance servers and 6 storage servers. Table 1 shows the aggregate properties of the full instrument. The total disk capacity was originally 6.5 PB, with 4.3 PB in the storage layer and 2.2 PB in the performance layer. The system has since grown to 10 PB in total, mostly through additional storage nodes, the newer of which use 4 TB disk drives. The peak aggregate sequential I/O performance has been measured at over 500 GB/s, and the peak GPU floating-point performance exceeds 100 TFLOP/s. This compares favorably with other HPC systems. For example, the Oak Ridge Jaguar system, the world’s fastest scientific computer when the Data-Scope was built, had 240 GB/s peak I/O on its 5 PB Spider file system. The total power consumption is only 116 kW, a fraction of that of typical HPC systems and a factor of 3 better per PB of storage capacity than its predecessor, the GrayWulf. The following table summarizes the original Data-Scope properties for single servers and for the whole system, consisting of Performance (P) and Storage (S) servers.